Oppressive Code

We tend to presume that digital tools are transparent and democratising “disrupters”, but as currently deployed, algorithms pose a major threat to the human rights of marginalised groups.

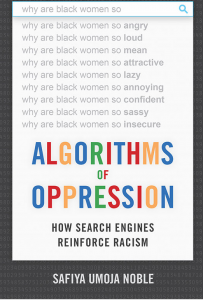

Safiya Umoja Noble, an assistant professor of information studies at the University of California, Los Angeles, recounts her experience in her latest book, Algorithms of Oppression. Looking for things to entertain her pre-teen stepdaughter and visiting nieces, Noble searched Google for “black girls”. The result shocked her. She had expected the phrase to bring up information on things that might interest girls of their demographic, but instead it produced a page awash with pornography. “This top hit and best information, as listed by rank in the search results, was certainly not the best information for me or for the children I love.” Noble questions Google’s abdication of responsibility for the results its algorithms produce. She also acknowledges how easy it is to forget that Google is not a public information resource but a multinational advertising company. Google Search is rooted in the “citations” of internet users, and Google is more of a “supermarket of ideas” than the allegorical marketplace of ideas regarded as a vital component of democracy.

As in a real supermarket, the most eye-catching items are not necessarily displayed because they offer the best quality and value, but because of the commercial interests and economic clout of the retailers and producers.

Google Search seems to show just how accurately and quickly its algorithms can find the information we want, but in Algorithms of Oppression we are confronted with a supermarket of information in which the “black girls” aisle puts pornography at eye level and the “professors” shelf displays row after row of white men. Black teenage boys are found next to criminal background-check products, and a stack of white supremacist “statistics” about “Black on white crimes” obscures accurate government sources and statistics.

Noble asks us to consider the implications of our ever-greater reliance on advertising companies “for information about people, culture, ideas and individuals”.

Who benefits? Automating Inequality, Virginia Eubanks’s study of the use of automation and algorithms by public service agencies in the US, exposes the digital “expansion and continuation of moralistic and punitive poverty management strategies that have been with us since the 1820s”.

Eubanks shows how double standards can be embedded in, and exacerbated by, automation. In Indiana, an automated benefits system that categorised even its own frequent errors as “failure to cooperate” denied a million welfare applications over three years, and wrongful denials of food stamps soared from 1.5 per cent to 12.2 per cent. In the name of efficiency and fraud reduction, automation replaced caseworkers who would otherwise exercise compassion and wisdom case by case, helping vulnerable people navigate the complexities of poverty, illness, unemployment and bereavement.

In Los Angeles, 50,000 residents remain unhoused. Many have provided intimate and sensitive information to a database that can be accessed by 168 different organisations, and even the police can access this data without a warrant, because the system equates poverty and homelessness with criminality.

Meanwhile, housed LA residents who enjoy mortgage tax deductions do not have their personal information scrutinised or made available to law enforcement without a warrant.

When poor families reach out for public assistance, a predictive model adds this information to its store of statistical suspicion. The middle class, on the other hand, can get help from babysitters, therapists and private drug and alcohol rehabilitation centres without coming under statistical scrutiny. Calls to an abuse hotline, often triggered by racial and class prejudice or personal vendettas, are stored indefinitely as input.

Noble recommends that technology companies employ more people educated in the humanities and take a more effective approach to achieving greater diversity in their leadership. She finally pulls back the curtain on digital tools of power and privilege, and asks us whether we care enough to do anything about it. We will not sort out social inequality by lying in bed staring at smartphones.

At stake is the power of algorithms in the age of neoliberalism, and the way those digital decisions reinforce oppressive social relationships and enact new models of racial profiling and technological redlining.

Algorithms of Oppression: How Search Engines Reinforce Racism by Safiya Umoja Noble, NYU Press, £21.99 / $28, 256 pages.